LOF

- class frlearn.data_descriptors.LOF(dissimilarity: str = 'boscovich', k: int = <function log_multiple.<locals>._f>, nn_search: ~frlearn.neighbours.neighbour_search_methods.NeighbourSearchMethod = <frlearn.neighbours.neighbour_search_methods.KDTree object>, preprocessors=(<frlearn.statistics.feature_preprocessors.IQRNormaliser object>, ))

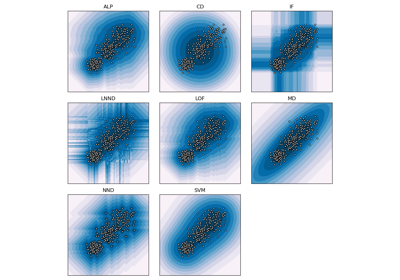

Implementation of the Local Outlier Factor (LOF) data descriptor [1].

- Parameters:

- dissimilarity: str or float or (np.array -> float) or ((np.array, np.array) -> float) = 'boscovich'

The dissimilarity measure to use. A vector size measure `np.array -> float` induces a dissimilarity measure through application to `y - x`. A float is interpreted as Minkowski size with the corresponding value for `p`. For convenience, a number of popular measures can be referred to by name. The default is the Boscovich norm (also known as cityblock, Manhattan or taxicab norm).

- k: int or (int -> float) or None = 2.5 * log n

How many nearest neighbours to consider. Should be either a positive integer, or a function that takes the target class size `n` and returns a float, or None, which is resolved as `n`. All such values are rounded to the nearest integer in `[1, n]`.

- preprocessors: iterable = (IQRNormaliser(), )

Preprocessors to apply. The default interquartile range normaliser rescales all features to ensure that they all have the same interquartile range.
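The parameter conventions above can be illustrated with a minimal standard-library sketch. It is not frlearn's implementation: the helper names (`minkowski_size`, `dissimilarity_from_size`, `resolve_k`, `iqr_normalise`) are hypothetical. It also assumes a natural logarithm in the default `k`, Python's built-in rounding, and normalisation by dividing each feature by its interquartile range.

```python
import math
import statistics


def minkowski_size(p):
    """Hypothetical helper: vector size measure for the Minkowski p-norm."""
    def size(v):
        return sum(abs(c) ** p for c in v) ** (1 / p)
    return size


def dissimilarity_from_size(size):
    """Induce a dissimilarity by applying a size measure to y - x."""
    def dissimilarity(x, y):
        return size([b - a for a, b in zip(x, y)])
    return dissimilarity


def resolve_k(k, n):
    """Resolve k against the target class size n and clip it to [1, n].

    None resolves to n, a callable is applied to n, and a number is used
    directly. Tie handling in round() is an assumption of this sketch.
    """
    if k is None:
        value = n
    elif callable(k):
        value = k(n)
    else:
        value = k
    return min(max(round(value), 1), n)


def iqr(values):
    """Interquartile range, using the default method of statistics.quantiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    return q3 - q1


def iqr_normalise(column):
    """Rescale one feature so its interquartile range is 1.

    Assumes a non-zero interquartile range.
    """
    scale = iqr(column)
    return [v / scale for v in column]


# p = 1 gives the Boscovich (cityblock) dissimilarity.
boscovich = dissimilarity_from_size(minkowski_size(1))


def default_k(n):
    """Default from the parameter description, assuming a natural logarithm."""
    return 2.5 * math.log(n)
```

For example, `boscovich([0, 0], [3, -4])` sums the absolute coordinate differences, and `resolve_k(default_k, n)` yields an integer number of neighbours between 1 and `n`.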

Notes

The scores are derived from the local outlier factor lof as 1/(1 + lof).
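As a small illustration of this transformation, the sketch below maps a non-negative local outlier factor to a score. The function name `lof_to_score` is hypothetical and not part of frlearn. It only shows the formula from this note, not how the factor itself is computed.

```python
def lof_to_score(lof):
    """Map a non-negative local outlier factor to a score in (0, 1].

    Larger factors (more outlying instances) give lower scores.
    """
    if lof < 0:
        raise ValueError("local outlier factor must be non-negative")
    return 1 / (1 + lof)
```

Under this mapping, an instance whose local density matches that of its neighbours (a factor of 1) receives a score of 0.5, and the score decreases towards 0 as the factor grows.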

`k` is the principal hyperparameter that can be tuned to increase performance. Its default value is based on the empirical evaluation in [2].

References

[1]

- class Model